Research

My work explores how intelligent robots can perceive, adapt, and act in the real world. I am particularly interested in enabling robots to interpret human intentions, respond in real time, and move with agility through unstructured environments, as naturally as living organisms do.

High DOF Tendon-Driven Soft Hand: A Modular System for Versatile and Dexterous Manipulation

Yeonwoo Jang, Hajun Lee, Junghyo Kim, Taerim Yoon, Yoonbyun Chai, Heejae Won, Sungjoon Choi, Jiyun Kim

IROS, 2025 · project page

We present a modular, high-DOF, tendon-driven soft finger and a customizable soft hand system capable of diverse dexterous manipulation tasks. By integrating an all-in-one actuation module and allowing flexible finger arrangements, our design supports versatile, task-oriented soft robotic platforms.

Learning Steerable Imitation Controllers from Unstructured Animal Motions

Dongho Kang, Jin Cheng, Fatemeh Zargarbashi, Taerim Yoon, Sungjoon Choi, Stelian Coros

arXiv (under review), 2025 · project page / arXiv

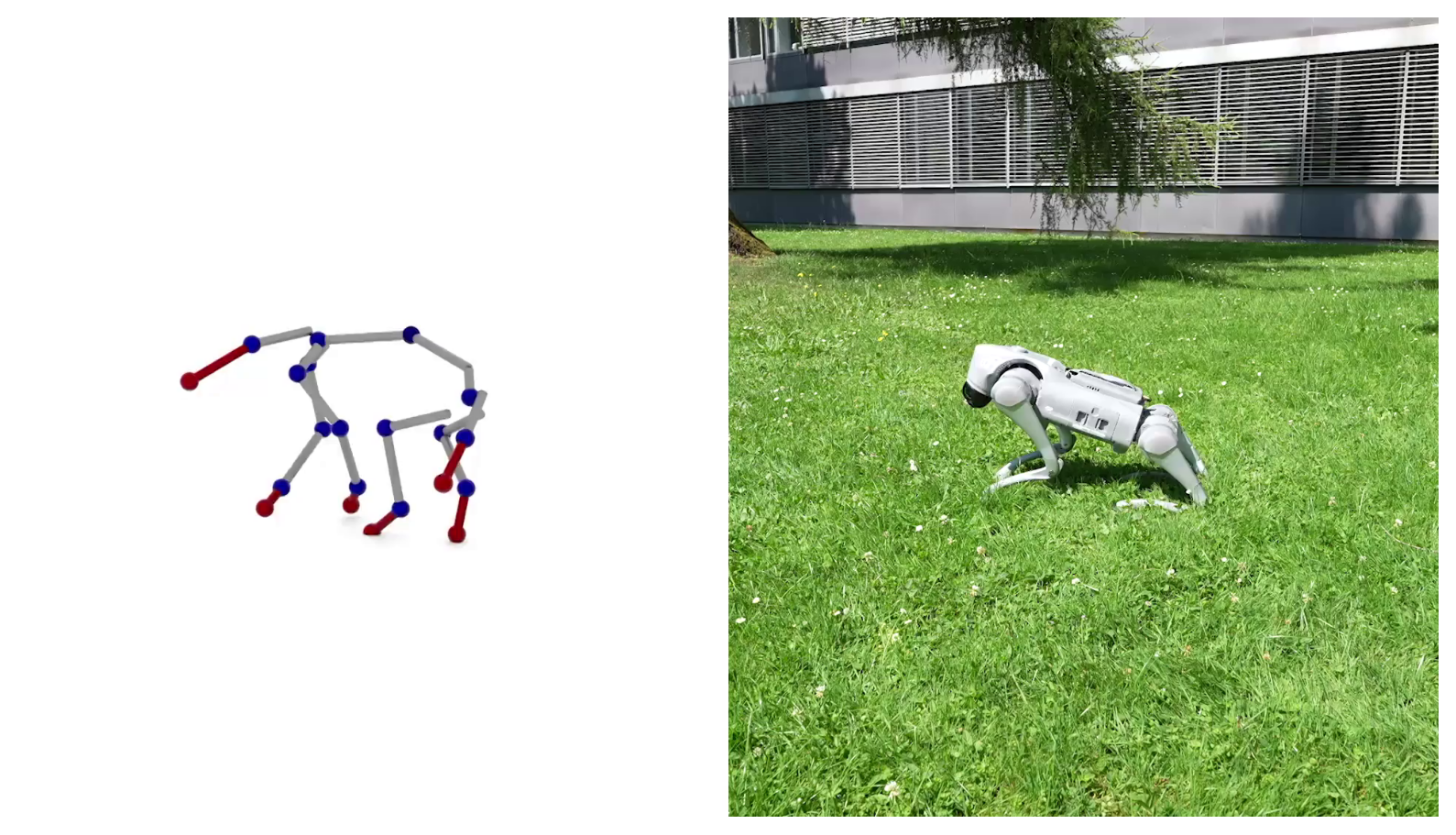

Spatio-Temporal Motion Retargeting for Quadruped Robots

Taerim Yoon, Dongho Kang, Seungmin Kim, Jin Cheng, Minsung Ahn, Stelian Coros, Sungjoon Choi

IEEE Transactions on Robotics (T-RO), 2025 · project page / arXiv

Our method enables terrain-aware motion retargeting and time-critical skills such as BackFlip and HopTurn from noisy inputs, such as videos or physics-ignorant kinematic frames, and successfully deploys them on real robots.

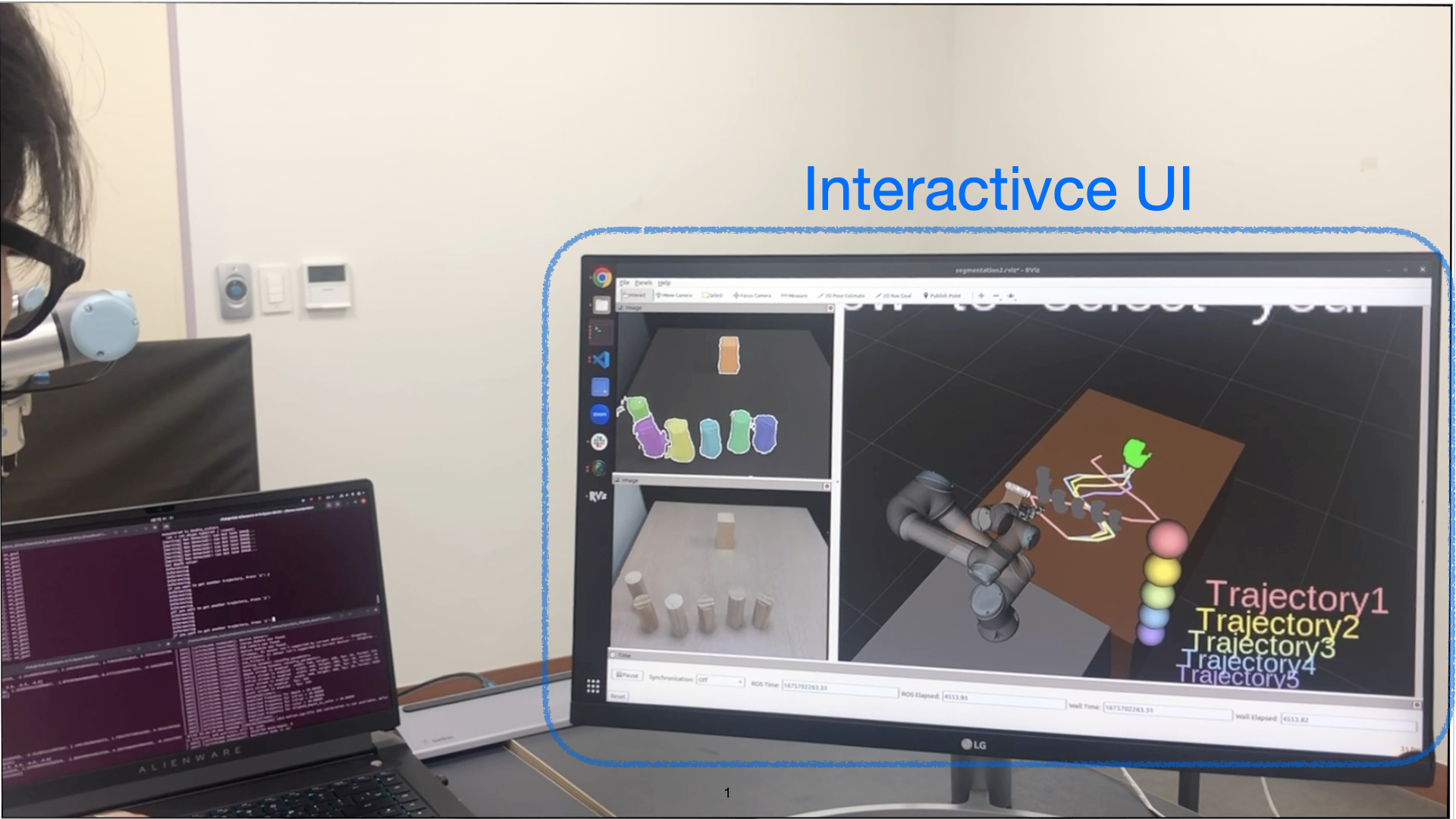

Quality-Diversity based Semi-Autonomous Teleoperation Using Reinforcement Learning

Sangbeom Park, Taerim Yoon, Joonhyung Lee, Sunghyun Park, Sungjoon Choi

Neural Networks, 2024 · project page / paper

Our goal is to find the right level of autonomy to share between a human operator and a robot. Fully autonomous control can leave the user with too little control, while fully manual control is burdensome. To address this, we propose a reinforcement-learning framework in which the robot suggests a diverse set of high-quality trajectory options, letting the user guide the robot more effectively.
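To make "a diverse set of high-quality options" concrete, here is a minimal sketch of a generic MAP-Elites-style quality-diversity archive. It is not the method from the paper: the toy trajectory parameterization, the 2-D behavior descriptor, the 5×5 grid, and the fitness function are all hypothetical stand-ins chosen for illustration.

```python
import random
from typing import Callable, Dict, List, Tuple

Cell = Tuple[int, int]
Elite = Tuple[float, List[float]]


def descriptor_to_cell(desc: Tuple[float, float], bins: int) -> Cell:
    """Map a 2-D behavior descriptor in [0, 1]^2 to a grid cell (1.0 falls in the last bin)."""
    x, y = desc
    return (min(int(x * bins), bins - 1), min(int(y * bins), bins - 1))


def map_elites(
    fitness: Callable[[List[float]], float],
    descriptor: Callable[[List[float]], Tuple[float, float]],
    dim: int,
    bins: int = 5,
    iterations: int = 2000,
    n_init: int = 100,
    sigma: float = 0.1,
    seed: int = 0,
) -> Dict[Cell, Elite]:
    """Keep the best-scoring parameter vector found in each behavior cell."""
    rng = random.Random(seed)
    archive: Dict[Cell, Elite] = {}
    for i in range(iterations):
        if i < n_init or not archive:
            # Random initialization phase.
            x = [rng.uniform(0.0, 1.0) for _ in range(dim)]
        else:
            # Mutate a randomly chosen elite with Gaussian noise, clipped to [0, 1].
            _, parent = rng.choice(list(archive.values()))
            x = [min(max(v + rng.gauss(0.0, sigma), 0.0), 1.0) for v in parent]
        f = fitness(x)
        cell = descriptor_to_cell(descriptor(x), bins)
        # Replace the cell's elite only if the new candidate scores higher.
        if cell not in archive or f > archive[cell][0]:
            archive[cell] = (f, x)
    return archive


def suggest(archive: Dict[Cell, Elite], k: int) -> List[Tuple[Cell, float, List[float]]]:
    """Return the k highest-scoring elites; each comes from a distinct cell."""
    ranked = sorted(archive.items(), key=lambda item: item[1][0], reverse=True)
    return [(cell, f, x) for cell, (f, x) in ranked[:k]]


# Hypothetical toy problem: a "trajectory" is a parameter vector in [0, 1]^dim.
def toy_descriptor(x: List[float]) -> Tuple[float, float]:
    # The first two parameters stand in for where the trajectory ends up.
    return (x[0], x[1])


def toy_fitness(x: List[float]) -> float:
    # The remaining parameters are scored by a smoothness-like cost (0 is best).
    return -sum((v - 0.5) ** 2 for v in x[2:])


if __name__ == "__main__":
    archive = map_elites(toy_fitness, toy_descriptor, dim=4)
    for cell, f, _ in suggest(archive, 3):
        print(cell, round(f, 4))
```

Because the archive stores at most one elite per behavior cell, the top-k suggestions are guaranteed to differ in behavior, so the user picks among qualitatively different options rather than near-duplicates of the single best one.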

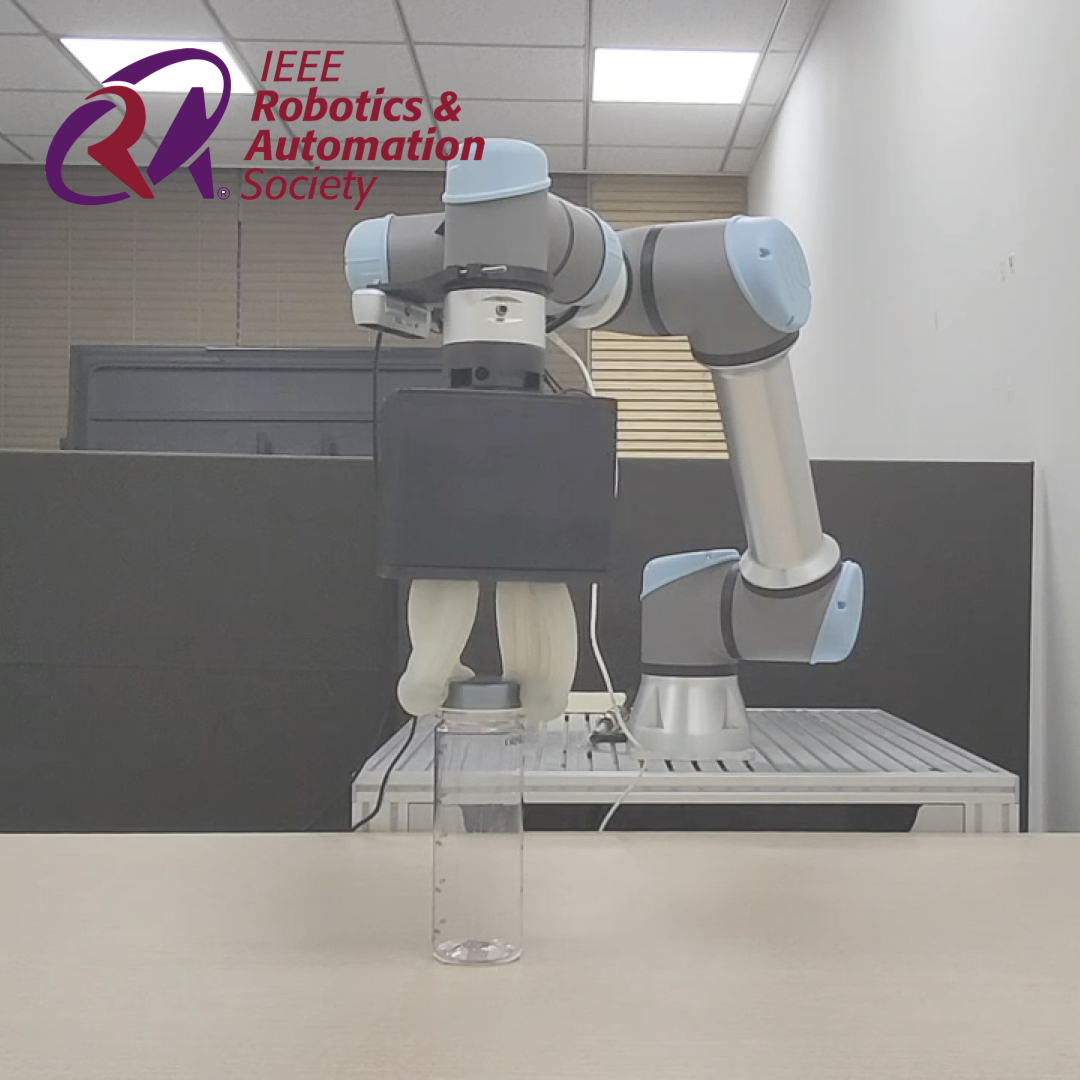

Kinematics-Informed Neural Networks: Enhancing Generalization Performance of Soft Robot Model Identification

Taerim Yoon, Yoonbyung Chai, Yeonwoo Jang, Hajun Lee, Junghyo Kim, Jaewoon Kim, Jiyun Kim, Sungjoon Choi

IEEE Robotics and Automation Letters (RA-L), 2024 · project page / paper

We propose a kinematics-informed neural network (KINN) that combines rigid-body kinematic priors with data-driven learning, enabling safe, dexterous, and unified control of hybrid rigid-soft robots.
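The general idea of pairing a rigid-body prior with a learned model can be sketched as "analytic kinematics plus a learned residual". This is a simplified illustration, not KINN itself: it swaps the neural network for a linear least-squares residual, and the two-link planar prior, link lengths, features, and synthetic "soft" offset are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

L1, L2 = 0.3, 0.2  # hypothetical link lengths of the rigid part (m)


def rigid_prior(q: Sequence[float]) -> Tuple[float, float]:
    """Planar two-link forward kinematics used as the rigid-body prior."""
    q1, q2 = q
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))


def features(q: Sequence[float]) -> List[float]:
    # Hypothetical residual features; a neural network would learn its own.
    return [1.0, q[0], q[1]]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_residual(qs, ys, ridge: float = 1e-9) -> List[List[float]]:
    """Least-squares fit of y - prior(q), one weight vector per output coordinate."""
    phis = [features(q) for q in qs]
    k = len(phis[0])
    ata = [[sum(p[i] * p[j] for p in phis) + (ridge if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    weights = []
    for out in range(2):
        res = [y[out] - rigid_prior(q)[out] for q, y in zip(qs, ys)]
        atb = [sum(p[i] * r for p, r in zip(phis, res)) for i in range(k)]
        weights.append(solve(ata, atb))
    return weights


def predict(q: Sequence[float], weights: List[List[float]]) -> Tuple[float, float]:
    """Hybrid prediction: rigid-body prior plus learned residual."""
    px, py = rigid_prior(q)
    phi = features(q)
    return (px + sum(w * f for w, f in zip(weights[0], phi)),
            py + sum(w * f for w, f in zip(weights[1], phi)))


def soft_ground_truth(q: Sequence[float]) -> Tuple[float, float]:
    # Hypothetical "true" system: rigid kinematics plus a small soft-body offset.
    x, y = rigid_prior(q)
    return (x + 0.01 + 0.02 * q[1], y - 0.015 * q[0])


if __name__ == "__main__":
    train = [(0.1 * i, 0.05 * j) for i in range(6) for j in range(6)]
    w = fit_residual(train, [soft_ground_truth(q) for q in train])
    q_test = (0.8, 0.4)  # outside the training range
    print(predict(q_test, w), soft_ground_truth(q_test))
```

Because the prior already captures most of the geometry, the learned part only has to model a small correction, which is why a structure like this can extrapolate beyond the training range in the toy example above.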

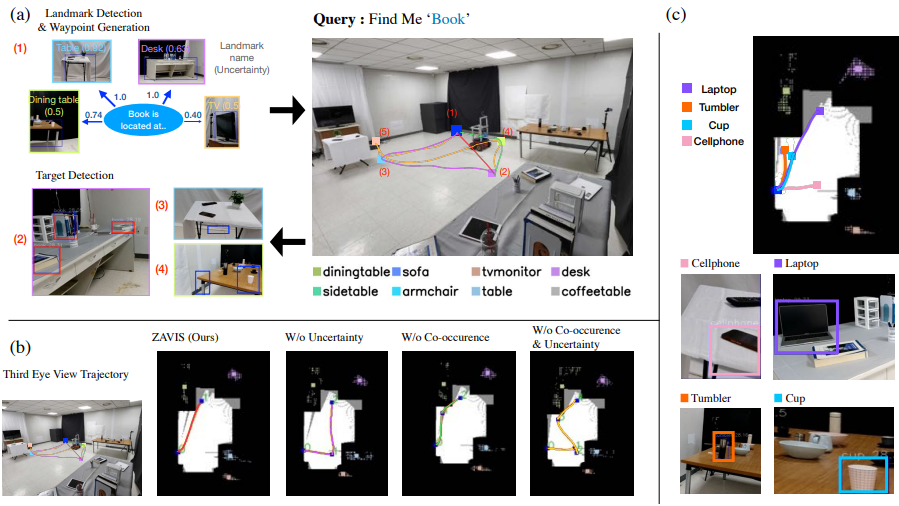

Zero-shot Active Visual Search (ZAVIS): Intelligent Object Search for Robotic Assistants

Jeongeun Park, Taerim Yoon, Jejoon Hong, Youngjae Yu, Matthew Pan, Sungjoon Choi

ICRA, 2023 · project page / paper / arXiv / video

This work introduces Zero-shot Active Visual Search (ZAVIS), a system that enables a robot to search for user-specified objects using free-form text and a semantic map of landmarks. By leveraging commonsense co-occurrence and predictive uncertainty, ZAVIS improves search efficiency and outperforms prior methods in both simulated and real-world environments.
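One simple way to combine co-occurrence estimates with predictive uncertainty is an optimistic, UCB-style score: visit next the landmark whose mean co-occurrence probability plus a bonus proportional to its spread is highest. This is a minimal sketch under that assumption, not ZAVIS's actual scoring rule; the landmarks, probability samples, and `beta` weight are hypothetical.

```python
import statistics
from typing import Dict, List, Optional, Set

# Hypothetical samples of P(target "mug" is near landmark), e.g. from several
# independent predictions; their spread serves as a proxy for uncertainty.
example_samples: Dict[str, List[float]] = {
    "kitchen counter": [0.8, 0.7, 0.9],
    "desk": [0.5, 0.2, 0.8],
    "sofa": [0.2, 0.1, 0.3],
    "bathroom sink": [0.05, 0.05, 0.1],
}


def score_landmarks(samples: Dict[str, List[float]], beta: float = 1.0) -> Dict[str, float]:
    """Optimistic score: mean co-occurrence probability plus beta times its spread."""
    return {lm: statistics.fmean(p) + beta * statistics.pstdev(p) for lm, p in samples.items()}


def next_landmark(samples: Dict[str, List[float]], visited: Set[str],
                  beta: float = 1.0) -> Optional[str]:
    """Pick the highest-scoring landmark that has not been visited yet."""
    scores = score_landmarks(samples, beta)
    candidates = {lm: s for lm, s in scores.items() if lm not in visited}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


def search_order(samples: Dict[str, List[float]], beta: float = 1.0) -> List[str]:
    """Greedily visit landmarks until every one has been checked."""
    order: List[str] = []
    while (lm := next_landmark(samples, set(order), beta)) is not None:
        order.append(lm)
    return order


if __name__ == "__main__":
    print(search_order(example_samples, beta=1.0))
    print(search_order(example_samples, beta=2.0))
```

With `beta=1.0` the kitchen counter is checked first, but raising `beta` to 2.0 moves the uncertain desk ahead of it: the uncertainty term trades exploiting confident guesses against exploring ambiguous ones.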

Projects

Unpublished but interesting projects that I have worked on.

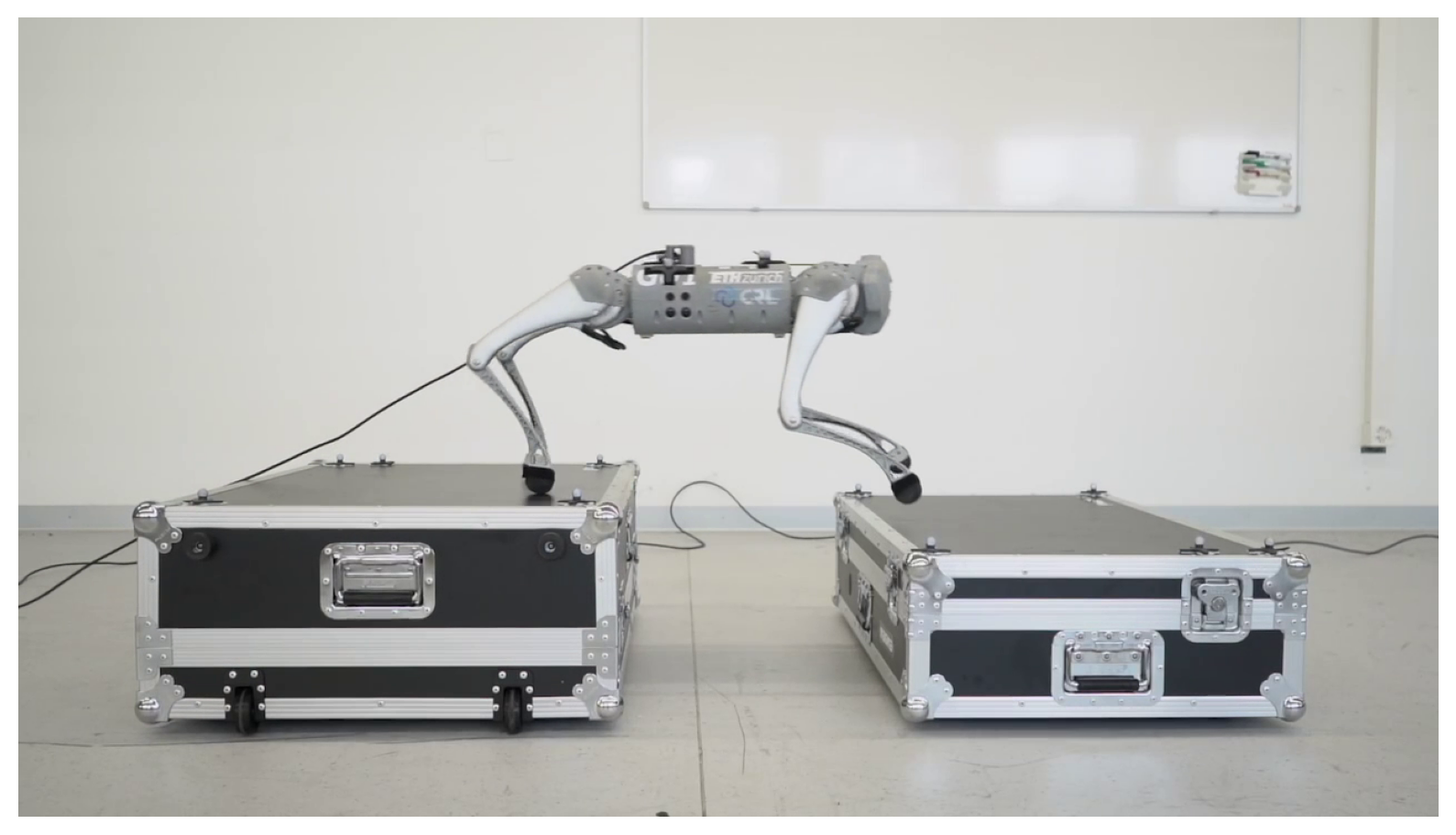

Project: Parkour

Taerim Yoon, Dongho Kang, Jin Cheng, Flavio De Vincenti, Hélène Stefanelli, Stelian Coros, Sungjoon Choi

ETH Summer Fellowship, 2024 · LinkedIn (Full Video)

Using reinforcement learning, we train a quadruped robot to perform parkour skills such as jumping over and between obstacles.